Managing a recycling operation at scale is a huge technical challenge. Our client operates over 50 trucks and 1,000 bins across the province. Every second, those assets report GPS coordinates, weights, timestamps, and sensor health. That is a lot of information to process in real time.

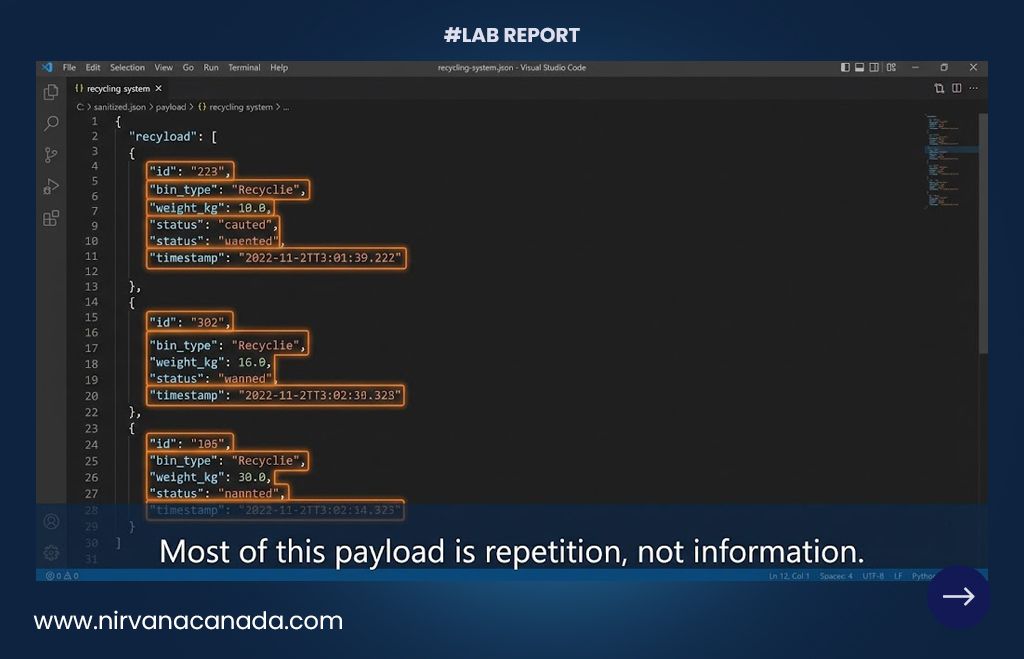

When we looked at their legacy system, it was struggling with “bloat.” Every time a truck picked up a bin, the system sent a massive packet of data. We found that roughly 60% of that packet was redundant information that the system already knew.

The Real Cost of Data Bloat

In 2026, bandwidth and server power are expensive. When you send unnecessary data millions of times a day, those costs add up quickly.

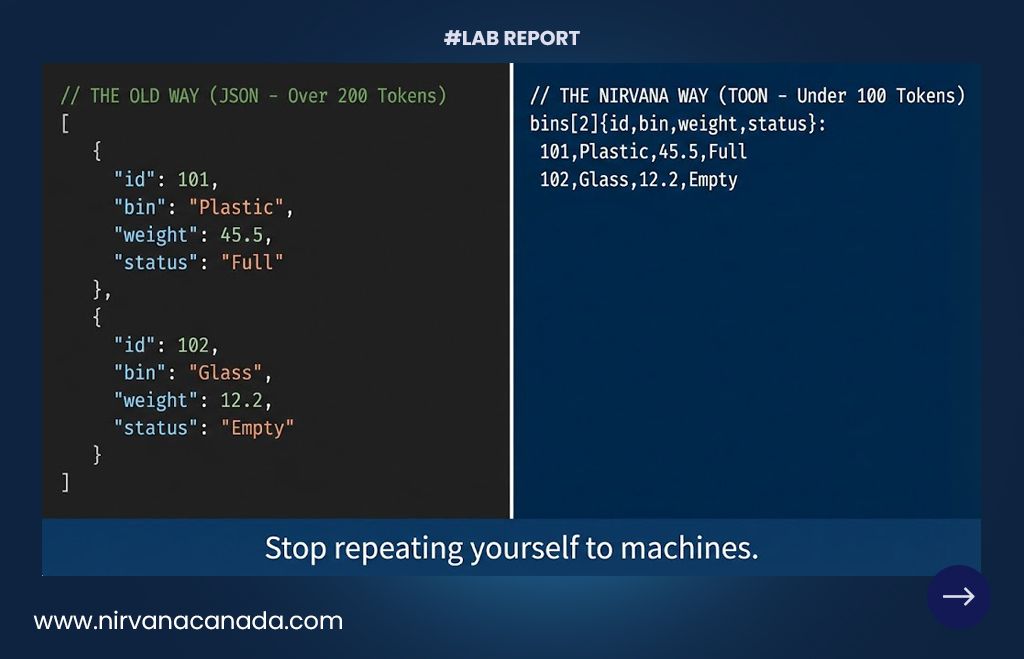

Looking at the server logs, we saw that we were spending a lot on compute power just to process simple messages like “this bin is full.” Instead of suggesting more expensive servers, we decided to fix the underlying logic. We took the problem into our lab and transitioned the system to TOON (Token-Oriented Object Notation).

The Roadmap: Moving Toward Efficiency

Step 1: Auditing the Data Exhaust

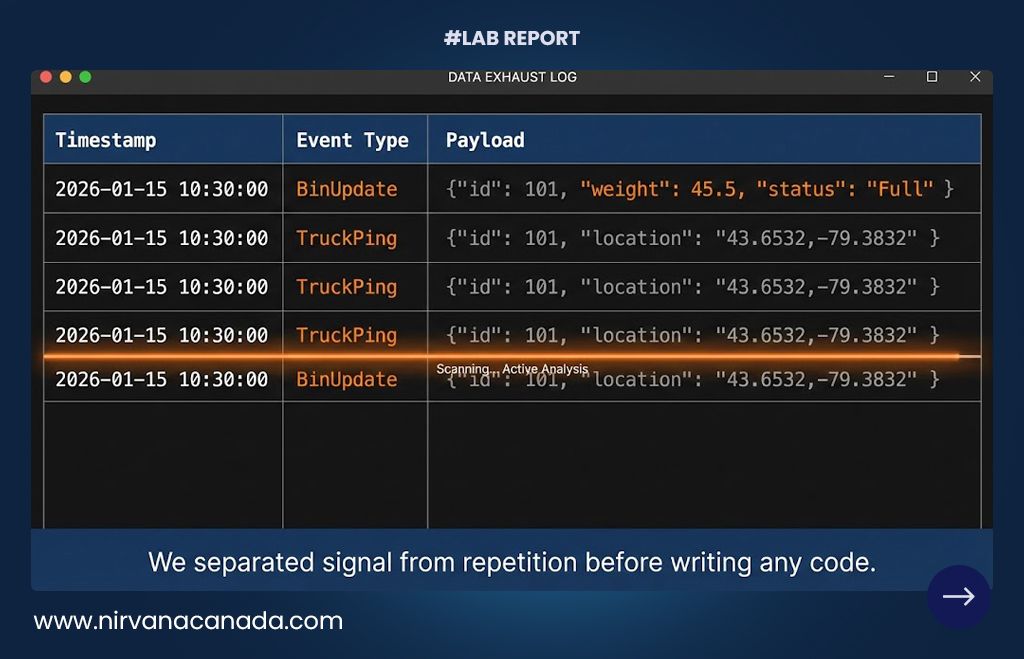

We started by logging every single data payload for 72 hours. We mapped out exactly which fields were changing and which ones were being resent needlessly just to satisfy the old JSON format.
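To make the audit idea concrete, here is a minimal sketch of how logged payloads could be scanned for fields that rarely change. The field names (`asset_id`, `depot`, `weight_kg`) and the `audit_fields` helper are hypothetical, chosen for illustration; they are not the client's actual schema or tooling.

```python
from collections import defaultdict


def audit_fields(payloads):
    """For each field, return the fraction of repeat payloads (same asset)
    in which the value differed from that asset's previous payload."""
    changes = defaultdict(int)
    seen = defaultdict(int)
    last = {}  # asset_id -> previous payload from that asset

    for payload in payloads:
        prev = last.get(payload["asset_id"])
        if prev is not None:
            for key, value in payload.items():
                seen[key] += 1
                if prev.get(key) != value:
                    changes[key] += 1
        last[payload["asset_id"]] = payload

    return {key: changes[key] / seen[key] for key in seen}


# Hypothetical sample: three consecutive payloads from one bin.
sample = [
    {"asset_id": "bin-1", "depot": "north", "weight_kg": 10.0},
    {"asset_id": "bin-1", "depot": "north", "weight_kg": 12.5},
    {"asset_id": "bin-1", "depot": "north", "weight_kg": 12.5},
]
rates = audit_fields(sample)
```

A field with a change rate near zero, like `depot` in this sample, is a candidate for being sent once rather than with every message.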

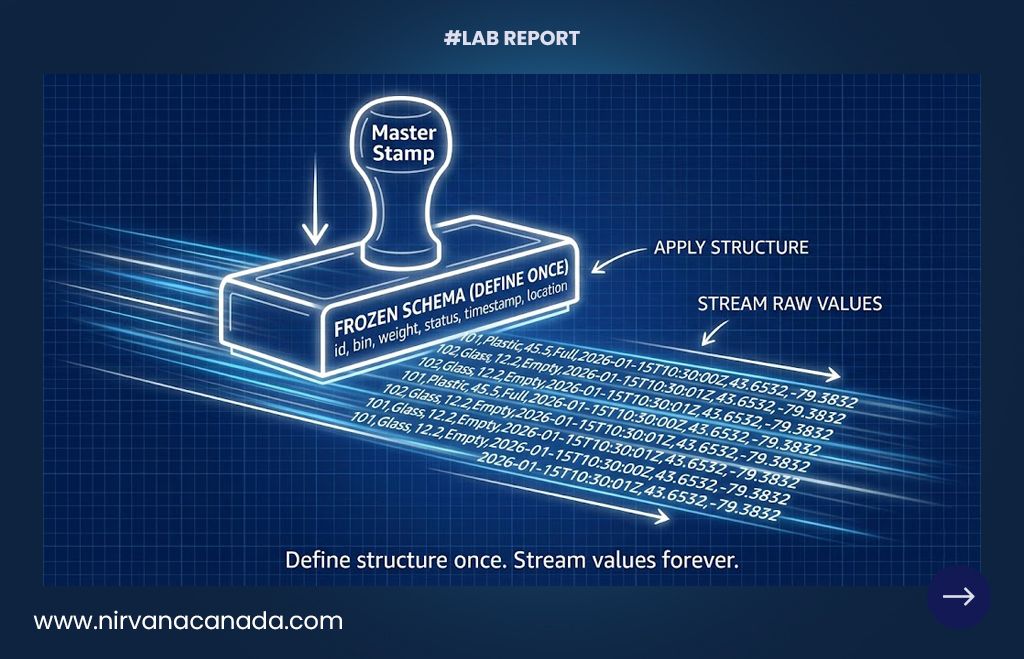

Step 2: Defining a Data Contract

We identified a set of “Stable Structures” - information like IDs and locations that stay consistent. We defined these once as a “contract” so the system doesn’t have to keep repeating the labels every few seconds.
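One way to picture such a contract is as an ordered list of field names that both sender and receiver agree on up front. The sketch below assumes a hypothetical `Contract` class and made-up field names; it illustrates the idea rather than the production implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    """An agreed, ordered set of field labels, shared once by both sides."""
    name: str
    fields: tuple

    def to_row(self, record: dict) -> list:
        """Strip the labels from a record, keeping values in contract order."""
        missing = [f for f in self.fields if f not in record]
        if missing:
            raise ValueError(f"record missing fields: {missing}")
        return [record[f] for f in self.fields]


# Hypothetical contract for a bin reading.
BIN_CONTRACT = Contract("bin_reading", ("bin_id", "lat", "lon", "weight_kg"))

row = BIN_CONTRACT.to_row(
    {"bin_id": "B-17", "lat": 45.5, "lon": -73.6, "weight_kg": 41.2}
)
```

Because the field order is fixed by the contract, the labels never need to travel with the values again.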

Step 3: Streaming Raw Values

By using TOON, we stopped sending key-value pairs. We send the structure once at the start, and from then on, we only stream the raw, high-velocity data values. This keeps the messages as small as possible.
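As a rough illustration of the header-once, values-after pattern, the sketch below encodes a few readings both ways and compares sizes. The line format is loosely modeled on that idea and is an assumption for this example: it does not use an official TOON library and is not guaranteed to match the TOON syntax. Field names and sample values are hypothetical.

```python
import json

FIELDS = ("bin_id", "weight_kg", "ts")  # hypothetical field order


def encode_header(name, fields):
    """Build the one-time structure line, e.g. readings{bin_id,weight_kg,ts}:"""
    return f"{name}{{{','.join(fields)}}}:"


def encode_row(record, fields):
    """Emit only the values, in header order.
    Note: values that themselves contain commas would need quoting."""
    return ",".join(str(record[f]) for f in fields)


readings = [
    {"bin_id": "B-17", "weight_kg": 41.2, "ts": 1700000000},
    {"bin_id": "B-18", "weight_kg": 7.9, "ts": 1700000005},
]

header = encode_header("readings", FIELDS)
rows = [encode_row(r, FIELDS) for r in readings]

compact = "\n".join([header, *rows])
as_json = "\n".join(json.dumps(r) for r in readings)

print(len(compact), "bytes compact vs", len(as_json), "bytes as JSON lines")
```

The header is a fixed cost paid once, so the saving grows with every additional row sent over the same stream.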

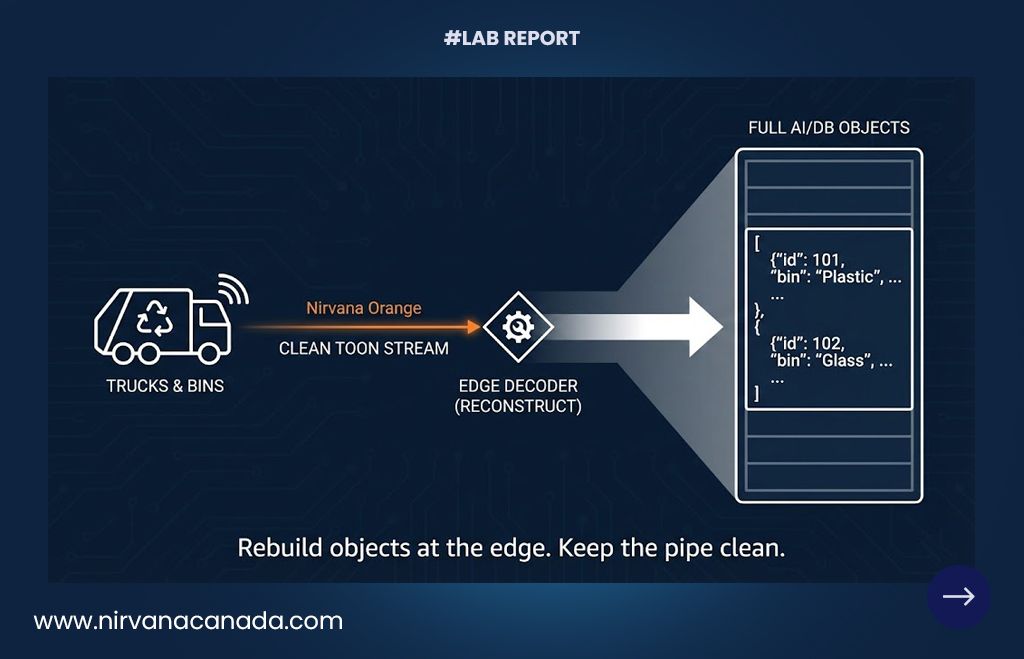

Step 4: Decoding at the Edge

To keep things compatible with AI and existing databases, we added a lightweight decoder. It reconstructs the data in memory instantly. This removes the “bloat” without adding any noticeable lag.
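A decoder of this kind can be very small. The sketch below assumes a hypothetical wire format in which one header line names the fields and each later line carries comma-separated values in that order; the per-field type list is also an assumption made for this example.

```python
def parse_header(line):
    """Split a header such as 'readings{bin_id,weight_kg,ts}:' into name and fields."""
    name, _, rest = line.partition("{")
    fields = rest.rstrip(":").rstrip("}").split(",")
    return name, fields


def decode_row(line, fields, types):
    """Rebuild a labelled record from a raw value line."""
    values = line.split(",")
    if len(values) != len(fields):
        raise ValueError(f"expected {len(fields)} values, got {len(values)}")
    return {f: cast(v) for f, cast, v in zip(fields, types, values)}


name, fields = parse_header("readings{bin_id,weight_kg,ts}:")
record = decode_row("B-17,41.2,1700000000", fields, (str, float, int))
```

The rebuilt `record` is an ordinary dictionary, so downstream consumers that expect key-value data can keep working unchanged.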

Step 5: Event-Based Tracking

Finally, we stopped sending “status snapshots.” Instead of the system constantly reporting that a bin is empty, it now only pings the server when a change occurs, such as a bin moving from “Empty” to “Full.”
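The change-only approach can be sketched as a filter that remembers each bin's last known state and emits a message only on a transition. The 40 kg "Full" threshold, the field names, and the `state_changes` helper are hypothetical values picked for the example.

```python
def state_changes(readings, full_at_kg=40.0):
    """Yield an event only when a bin's state differs from its last known state.
    The first reading for each bin always yields its initial state."""
    last_state = {}  # bin_id -> "Empty" or "Full"
    for r in readings:
        state = "Full" if r["weight_kg"] >= full_at_kg else "Empty"
        if last_state.get(r["bin_id"]) != state:
            last_state[r["bin_id"]] = state
            yield {"bin_id": r["bin_id"], "state": state, "ts": r["ts"]}


# Hypothetical readings from one bin: it fills up, then gets emptied.
readings = [
    {"bin_id": "B-17", "weight_kg": 5.0, "ts": 1},
    {"bin_id": "B-17", "weight_kg": 6.0, "ts": 2},
    {"bin_id": "B-17", "weight_kg": 41.0, "ts": 3},
    {"bin_id": "B-17", "weight_kg": 42.0, "ts": 4},
    {"bin_id": "B-17", "weight_kg": 3.0, "ts": 5},
]
events = list(state_changes(readings))
```

In this sample, five readings collapse into three events: the initial "Empty", the switch to "Full", and the return to "Empty".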

The Results

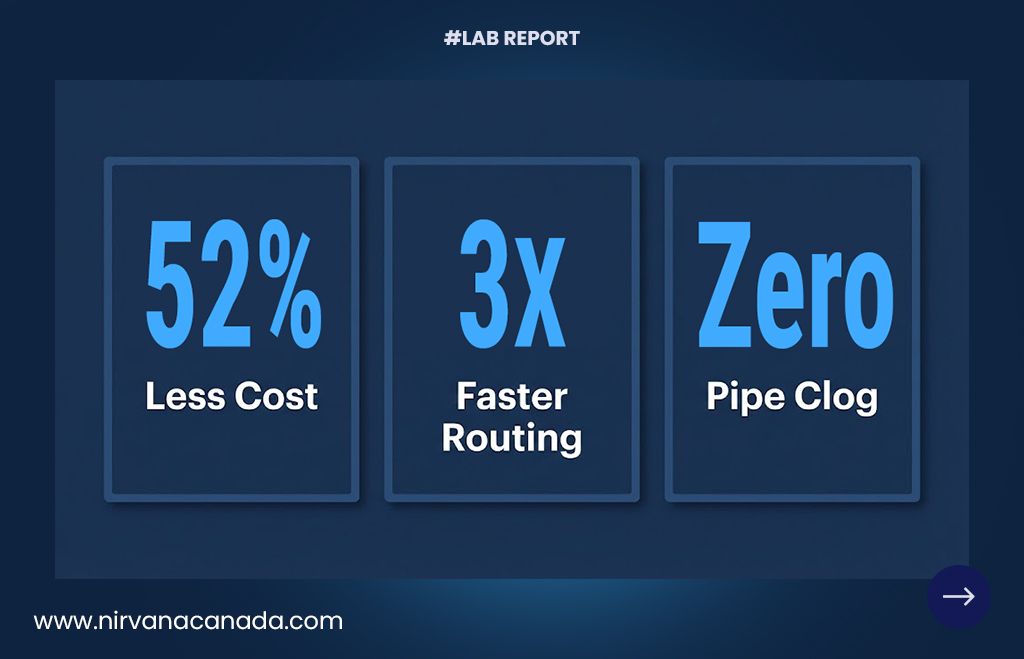

By prioritizing better logic over more hardware, we saw immediate improvements:

• 52% reduction in monthly data costs.

• 3× faster AI reasoning for truck routing (less data to parse means the AI responds quicker).

• No noticeable lag across the entire network.

I’m proud of what the team built here. It would have been easy to just pass an infrastructure bill to the client, but that isn’t our style. We would much rather re-engineer a system to remove waste - whether that’s digital bloat or the physical materials our clients manage every day.

If your current tech setup feels slow or expensive, let’s talk about how we can streamline your data.